In its 2020 Cloud Native Survey, the Cloud Native Computing Foundation (CNCF) found that the use of service mesh in production had jumped 50% over the previous year.

You can read more about service meshes, the benefits of deploying one, and the Istio architecture in these articles:

– The Benefits of Deploying a Service Mesh

– An Introduction to ISTIO Service Mesh & its Architecture!

With the growing popularity of microservices architectures, the need for a service mesh has emerged. Adopting a service mesh in Kubernetes is one of the most sought-after ways to overcome the security and networking challenges that hinder Kubernetes deployment and container adoption.

In this article, we’ll explore the service mesh, its architecture and components, and the popular service mesh solutions. We’ll talk about:

- Why do you need a Service Mesh?

- What is a Service Mesh?

- Components of a Service Mesh Architecture

- How does it work?

- Benefits of Deploying a Service Mesh

- Available Service Meshes

Why Do You Need a Service Mesh?

Because the services in a microservices-based architecture are modular and distributed, they are difficult to manage. Whenever one microservice calls another, it is hard for DevOps teams to infer or debug what is happening inside those networked service calls.

This can cause serious trouble if problems are not detected promptly and correctly. Performance issues, security gaps, load balancing problems, and poor tracing or observability of service calls are some of the issues that can occur when running microservices. These problems get worse as more services become involved in the overall functioning of the application.

What is a Service Mesh?

This is where a service mesh, such as the open-source Istio project, can help. A service mesh is a dedicated, configurable infrastructure layer for managing service-to-service network communication within a cloud environment.

A service mesh controls and monitors how different parts of an application share data with one another. Unlike other systems for managing this type of communication, a service mesh is a dedicated infrastructure layer that is built right into an application.

This visible infrastructure layer can record how well (or poorly) different parts of an app interact. That makes it easier to optimize communication and avoid downtime as the app scales, and helps keep communication among the services in the containerized infrastructure fast, reliable, and secure.

Moreover, it can be used to implement features such as encryption, logging, tracing and load balancing, thereby improving the security, reliability and observability of the entire application.

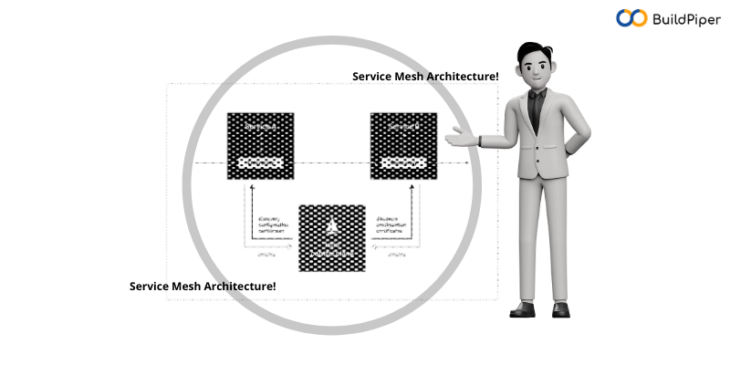

Components of a Service Mesh Architecture!

Here’s what a service mesh architecture looks like and which components it comprises. A service mesh consists of two elements:

– The Data Plane

– The Control Plane

While the data plane controls and manages the actual forwarding of the traffic, the control plane provides the configuration and coordination. Let’s take a quick look at these components of the service mesh architecture.

The Data Plane

In the service mesh architecture, the data plane refers to the network proxies. These network proxies are deployed alongside each instance of a service that needs to communicate with the other services in the system. All service calls moving to and from a service go through these proxies. The proxy then applies rules of authentication, authorization, encryption, rate-limiting and load balancing, handles service discovery, and implements logging and tracing.
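To make the proxy's role concrete, here is a minimal sketch in Python of the kind of rule pipeline a data-plane proxy applies. It is an illustration only, not how Envoy or any real proxy is implemented: the class name `SidecarProxy`, the fixed-window rate limiter, and the round-robin balancer are all assumptions chosen for brevity.

```python
import itertools
import time


class SidecarProxy:
    """Toy data-plane proxy (hypothetical): authorize, rate-limit, load-balance, log."""

    def __init__(self, upstreams, allowed_callers, max_requests_per_window,
                 window_seconds=1.0):
        self._upstreams = itertools.cycle(upstreams)  # round-robin load balancing
        self._allowed_callers = set(allowed_callers)
        self._max_requests = max_requests_per_window
        self._window_seconds = window_seconds
        self._window_start = time.monotonic()
        self._count = 0
        self.log = []  # (caller, path, status) tuples, standing in for access logs

    def _rate_limited(self):
        # Fixed-window counter: reset once the window has elapsed.
        now = time.monotonic()
        if now - self._window_start >= self._window_seconds:
            self._window_start = now
            self._count = 0
        self._count += 1
        return self._count > self._max_requests

    def handle(self, caller, path):
        """Return (status, upstream) for a request from `caller`."""
        if caller not in self._allowed_callers:
            self.log.append((caller, path, 403))
            return 403, None
        if self._rate_limited():
            self.log.append((caller, path, 429))
            return 429, None
        upstream = next(self._upstreams)
        self.log.append((caller, path, 200))
        return 200, upstream
```

Every request passes through `handle`, so authorization, rate limiting, load balancing, and logging all happen in one place outside the service's own code.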

The Control Plane

As seen above, the service mesh architecture needs a proxy for each instance of a service. In a microservices-based architecture that comprises hundreds of services, each service may be replicated many times to meet demand. This means there are just as many proxies to look after, and this is where the control plane comes into the picture.

The control plane exposes an interface through which users configure the behavior of the proxies in the data plane using policies, and it makes that configuration available to the proxies via another API. For this to work, each proxy in the data plane must connect to the control plane to register itself and receive its configuration.
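The register-then-receive-configuration flow can be sketched in a few lines of Python. This is a simplified model under stated assumptions: `ControlPlane`, `Proxy`, `register`, and `set_policy` are hypothetical names, and a real control plane streams configuration over the network rather than calling methods in-process.

```python
class Proxy:
    """Hypothetical data-plane proxy that holds whatever config it was last sent."""

    def __init__(self, name):
        self.name = name
        self.config = {}

    def apply(self, config):
        self.config = dict(config)  # copy so proxies never share mutable state


class ControlPlane:
    """Hypothetical control plane: tracks proxies and pushes policy to all of them."""

    def __init__(self):
        self._proxies = {}
        self._policy = {}

    def register(self, proxy):
        # A newly registered proxy immediately receives the current policy.
        self._proxies[proxy.name] = proxy
        proxy.apply(self._policy)

    def set_policy(self, **settings):
        # The user-facing side: update policy, then fan it out to every proxy.
        self._policy.update(settings)
        for proxy in self._proxies.values():
            proxy.apply(self._policy)
```

A short usage example:

```python
control_plane = ControlPlane()
orders = Proxy("orders-1")
control_plane.register(orders)
control_plane.set_policy(retries=2, mtls="strict")
print(orders.config)
```

The point of the design is that operators change policy in one place and never touch individual proxies.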

Sidecar Proxies

Every call within the microservices architecture goes through a proxy, so the service mesh adds an extra hop to every call. To minimize the additional latency, the proxy needs to run on the same machine, or in the same pod, as the service it proxies for, so that the two can communicate over localhost. This model is known as sidecar deployment, hence the name “sidecar proxy”.

How does it work?

Here’s how a service mesh in Kubernetes works. A service mesh is built into an app as an array of network proxies. Requests between microservices are routed through these proxies, which live in their own infrastructure layer. The sidecar proxies run alongside each service while remaining decoupled from it, and together they form a mesh network.

A service mesh separates the network communication logic from the application logic. A service only needs to know about its local proxy; the mesh then implements network communication and the associated features on its behalf. With a service mesh in Kubernetes, developers do not need to code communication logic into their services. Because the logic governing inter-service communication lives in the sidecar proxy, failures in the services are easier to detect. The sidecars can also handle tasks such as monitoring the microservices.
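The separation between application logic and communication logic can be illustrated with a small Python sketch. All names here (`LocalProxy`, `place_order`, `make_flaky_transport`) are hypothetical, and the in-process `transport` callable merely stands in for the network; the point is that retries live in the proxy while the service function contains none.

```python
class LocalProxy:
    """Stands in for the sidecar: it owns retries so the service does not."""

    def __init__(self, transport, max_attempts=3):
        self._transport = transport      # callable(service_name, request) -> response
        self._max_attempts = max_attempts
        self.attempts = 0                # total attempts made, useful for observability

    def call(self, service_name, request):
        last_error = None
        for _ in range(self._max_attempts):
            self.attempts += 1
            try:
                return self._transport(service_name, request)
            except ConnectionError as exc:
                last_error = exc         # remember the failure and try again
        raise last_error


def place_order(proxy, order_id):
    """Application logic only: no retry, discovery, or encryption code here."""
    return proxy.call("payments", {"order_id": order_id})


def make_flaky_transport(failures):
    """Test helper: a transport that fails `failures` times, then succeeds."""
    state = {"remaining": failures}

    def transport(service_name, request):
        if state["remaining"] > 0:
            state["remaining"] -= 1
            raise ConnectionError("upstream unavailable")
        return {"service": service_name, "status": "ok", **request}

    return transport
```

Calling `place_order(LocalProxy(make_flaky_transport(2)), 7)` succeeds on the third attempt without `place_order` knowing that any retry took place.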

Benefits of Deploying a Service Mesh!

As discussed earlier, a service mesh in Kubernetes helps resolve the security and networking challenges of deploying on Kubernetes. It provides visibility, resilience, traffic control, and security control for services with little or no change to existing code, which frees developers from writing new code to address networking concerns. Deploying a service mesh in Kubernetes reduces the complexity associated with a microservices architecture and provides functionality such as:

- Load balancing

- Service discovery

- Health monitoring

- Authentication

- Traffic management and routing

- Circuit breaking and failover policy

- Security

- Logging, Metrics and telemetry

- Fault injection
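As an example of one item from this list, circuit breaking can be sketched as follows. This is a generic illustration of the pattern in Python, not the implementation used by any particular mesh; `CircuitBreaker`, `CircuitOpenError`, and the parameter names are assumptions, and the injectable `clock` exists only to make the behavior easy to test.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker refuses a call without trying the upstream."""


class CircuitBreaker:
    """Hypothetical breaker: opens after N consecutive failures, retries after a cooldown."""

    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self._threshold = failure_threshold
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at = None  # None means the circuit is closed

    def call(self, func, *args):
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cooldown elapsed: fall through and allow one trial call (half-open).
        try:
            result = func(*args)
        except Exception:
            self._failures += 1
            if self._failures >= self._threshold:
                self._opened_at = self._clock()  # open, or re-open after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

While the circuit is open, callers fail immediately instead of piling more load onto an upstream that is already struggling.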

But with benefits come complexities too. Implementing Istio can be challenging for DevOps teams that are busy with other critical development tasks. A microservices management platform such as BuildPiper can handle these complexities. BuildPiper provides support for Istio installation in Kubernetes and for Istio gateways, helping ensure a seamless, secure and compliant service deployment.

Available Service Meshes!

There are three popular service mesh solutions in the cluster ecosystem, all of them open source. Let’s look at each in turn.

Consul Connect

Consul Connect uses an agent that is installed on every node as a DaemonSet. This agent communicates with the Envoy sidecar proxies that handle the routing and forwarding of traffic within the environment. Like Istio, Consul Connect uses the Envoy proxy and the sidecar pattern.

Linkerd

Linkerd is a lightweight service mesh that can be placed on top of any existing platform. It is easy to install and comes with simple CLI tools, and installing it doesn’t require a platform admin. Its proxy is written in Rust. This service mesh works with Kubernetes only.

Istio Service Mesh

Istio is a Kubernetes-native solution. Automatic load balancing for HTTP, gRPC, WebSocket, and TCP traffic is one of its important features. It provides granular control of traffic behaviour and offers rich routing rules, retries, failovers, and fault injection. Istio is well suited to performance debugging, traffic management (load balancing and weighted balancing), and security.
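Weighted balancing is easiest to understand with a small example. Istio itself expresses such rules declaratively in its routing configuration; the Python below is only a sketch of the underlying idea, and the function name `pick_version` and the `reviews-v1`/`reviews-v2` destinations are hypothetical.

```python
import random


def pick_version(routes, rng):
    """Pick a destination according to percentage weights.

    `routes` maps a destination name to its integer weight; weights must sum to 100.
    `rng` is a random.Random instance, passed in so results are reproducible.
    """
    versions = list(routes)
    weights = [routes[v] for v in versions]
    if sum(weights) != 100:
        raise ValueError("weights must sum to 100")
    return rng.choices(versions, weights=weights, k=1)[0]


# Send roughly 90% of requests to v1 and 10% to v2 (a canary-style rollout).
rng = random.Random(42)
routes = {"reviews-v1": 90, "reviews-v2": 10}
picks = [pick_version(routes, rng) for _ in range(10000)]
share_v2 = picks.count("reviews-v2") / len(picks)
```

With enough requests, `share_v2` settles close to 0.10, which is what lets a team shift a small slice of traffic to a new version before rolling it out fully.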

Why ISTIO Service Mesh is the BEST of ALL!

As you can see, Istio has by far the most features and flexibility of these three service meshes. It offers comprehensive traffic management, with straightforward control and routing of ingress and egress traffic for the service mesh.

To give clear visibility into the insights and observability metrics the service mesh collects, Istio offers official integration with the Kiali management console.

Beyond the standard mutual TLS that it offers, Istio can easily be configured to accept or reject unencrypted traffic to preserve security. This is why Istio is one of the most preferred choices among the service meshes available today.